For some reason, we now have automated art

It felt like just a matter of time before computers would learn to beat humans at chess and use language in a convincing way. This feels like a natural progression for technology. But suddenly computers are painting and writing poetry too. I have to admit, I didn’t expect that.

Art is nowhere near the first industry to be hit by automation, but many of us were caught off guard by it. Technology is supposed to make our lives easier by freeing us from tedious labour. At least that’s how it’s sold to us, and in some cases it really does. A robot vacuum cleans our floors every morning at 9:00 am, and it needs no supervision. I love it! More robots like that, please!

That’s the kind of automation I think most of us have in mind when we imagine a high-tech future. But automated art? Uh… who asked for that? Wasn’t that what we were supposed to do with all the free time given to us by automation?

Is my career over?

I already see AI-generated art showing up in blogs and articles about soil life, in places where I or another human artist would likely have provided the work before (knowingly or not). For a while it felt like everyone was getting swept up in the AI hype and using it at every possible opportunity. I was caught up in the hype too, but not in a good way. All I could think of was how a human could possibly compete with this. These bots draw on the combined skills of thousands of artists to produce images in a matter of seconds. In future, perhaps only the most elite artists will be able to make a living from their work.

I floundered in this vague feeling of despair and uncertainty for months, watching AI break into every creative discipline that can be done on a computer. The hype was real, and so was the backlash. I started wondering if I needed to give up and either embrace these tools and learn to use them, or rethink my career path entirely.

With this in mind, I decided to set my feelings aside and test some of these tools out with an open mind to see if they really could do my job or make my work easier in some way.

Let’s see what it can do

We can start with Fooocus, which is based on Stable Diffusion. It’s free, easy to use, and works locally using your own GPU. There are no credit limits or restricted premium features. It uses simple text prompts and has a UI that lets you customize styles and settings without having to learn any complex prompting techniques.

Since I mainly draw microbes and my artwork is used most often in the context of soil life, that’s what I focused on with my prompts.

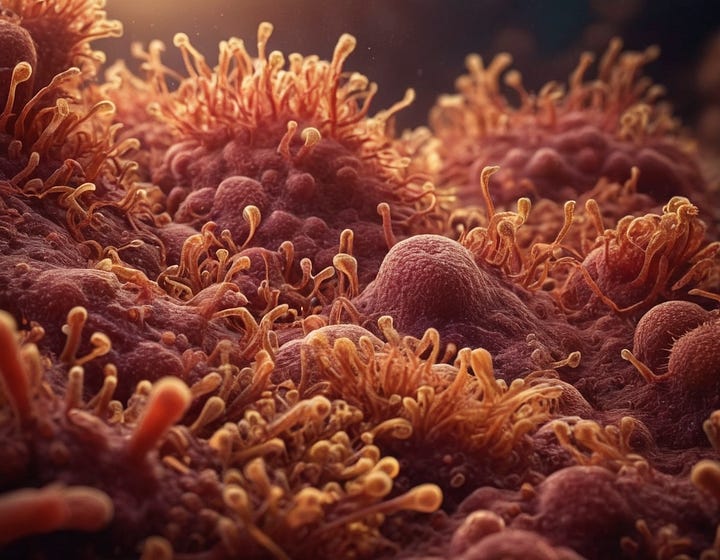

To start with, I asked it to draw protozoa in soil:

Okay, this is cool! The colours and textures work well, the composition is nice, and I like it overall. But… what is it?

The prompt was simply “protozoa in soil”, and I used a style called MRE Underground, which seems like a good way to get images that look like they’re actually in the soil instead of on the surface.

It looks like the AI was inspired by jellyfish to make this… creature. This is interesting because I often look at photos of jellyfish when trying to understand how light travels through soft translucent membranes underwater. It’s not a perfect analogy, but it helps a lot. It’s fascinating to see the AI make the same connection.

But okay, as pretty as it is, that image isn’t useful. It’s meaningless. Maybe the prompt was too vague? Let’s try something a little more specific.

“Ciliates in soil” reminds me of those macro photos of eyes. It looks microbe-ish, but it’s definitely not a ciliate. If anything, it looks more like some sort of mold. Is the prompt still too vague? Maybe I need to tell it more specifically which organism to draw.

How about one most people are familiar with, like an amoeba. There should be plenty of amoeba images out there…

Okay, wow. It’s definitely reminiscent of an amoeba, but it’s more like something out of Stranger Things than anything in real life. It’s a fantastically cool image, but again, not useful in this context. Here’s another “amoeba”:

Also very much not an amoeba, but it’s a great monster idea! This would make a good Infected Mushroom album cover. Once again, it’s a great image, but I have no use for it, and it’s nowhere near what I wanted.

What about tardigrades? Those are almost pop culture icons these days, so surely the AI can draw one…

Or maybe not.

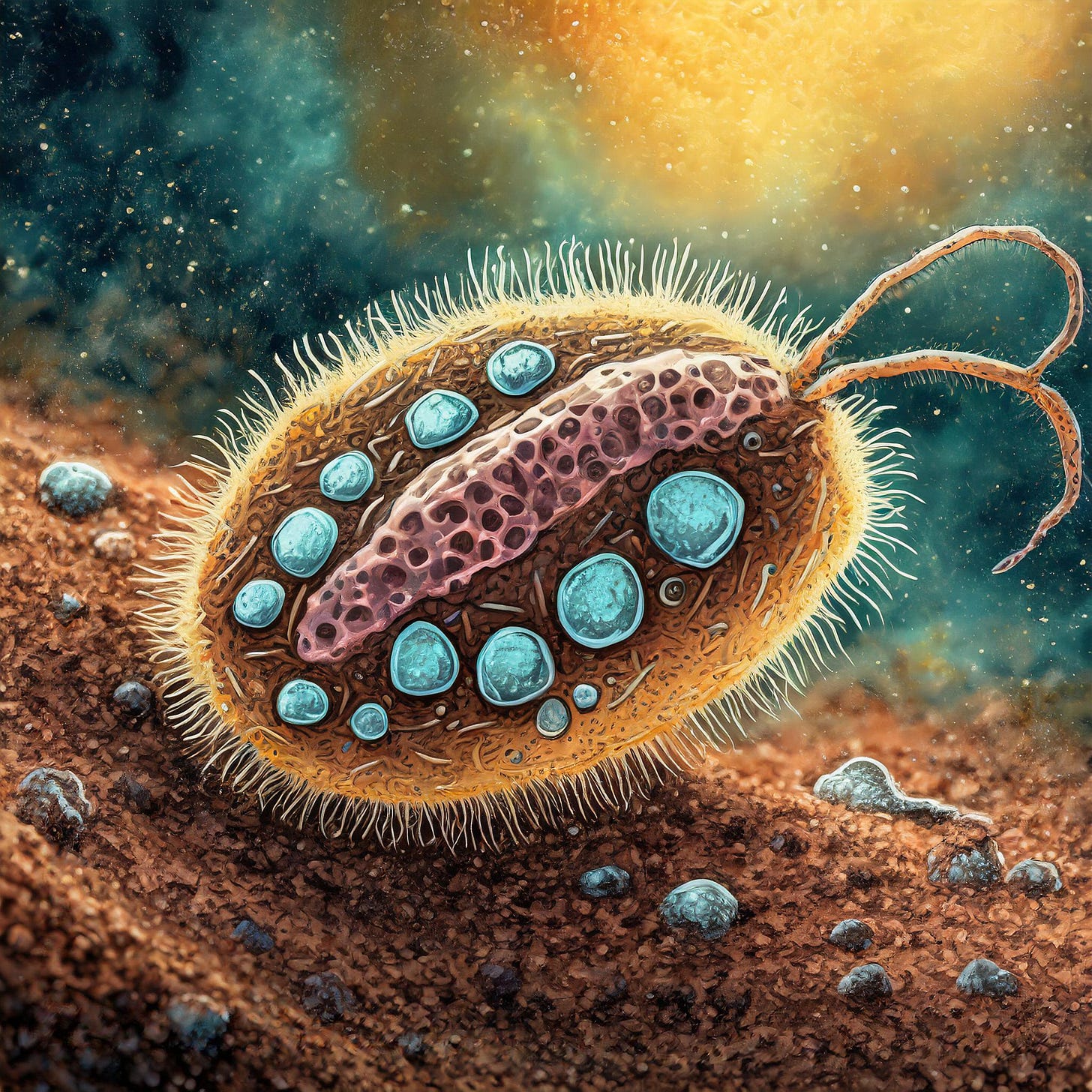

Okay, so specific microorganisms clearly don’t work, and it doesn’t seem to know what protozoa are at all. What about bacteria?

These aren’t bad if you just want generic microbe-ish filler images. Some are quite nice. I like the aesthetic, and while some of the shapes look more fungal than bacterial, I would say these do get the basic idea across. I can see someone using them to break up text in an article or blog post, as long as they don’t care about accuracy.

Let’s say I want to be more specific and create an image of bacteria in compost to go with an article on that subject:

There is some accuracy here. The substrate does look like compost. It’s a rich brown organic material with a mix of coarse and fine particles, and I’m surprised by how much some of those pieces really do look like bits of decomposing plant material. But the bacteria are very clearly learned from false-coloured SEM images. The AI doesn’t understand that those are unrealistic colours; it just takes everything it’s given as truth. If you do a Google image search for “bacteria” you’ll see a lot of images using bright colours like this. As far as the AI is concerned, that’s how bacteria look.

What about other AI image generators?

This could just be a problem with Fooocus, so I tested Dall-E 3 (using Bing) and Adobe’s Firefly with some of the same prompts:

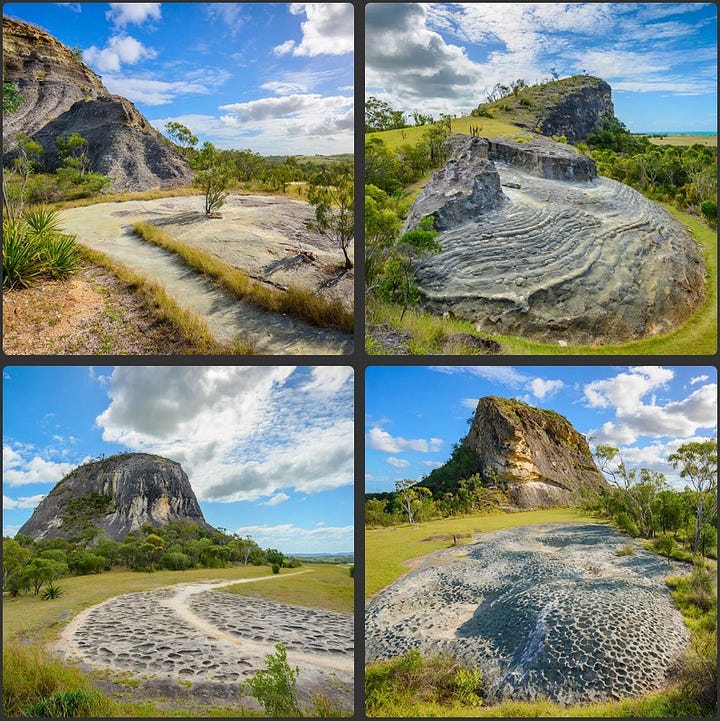

Protozoa in soil:

Now we’re getting somewhere! Nothing here is identifiable, but at least they look like protozoa. These are significantly closer to something useful, and if one doesn’t care about accuracy I can see them working as filler images in some context.

Amoeba:

Tardigrade:

Dall-E 3 clearly knows what a tardigrade is, at least vaguely, but Firefly has definitely not seen one before. I’m guessing the difference here has to do with which images these models were trained on. Adobe has made efforts to be more ethical with their image sourcing, which likely means their training data is more limited than what Dall-E 3 has had access to.

It’s possible to give Firefly an image to use as a style reference, so I decided to help it out with one of my own tardigrade illustrations and see if that would make a difference.

Of course, this is only a style reference, so the AI tries to draw the prompt in a way that looks similar to my artwork, but doesn’t learn anything about what the image shows. We can see the style influence, but it still doesn’t know what a tardigrade is, so that didn’t help.

Why isn’t this working?

I’m no expert on how AI image generation works, but I have a general idea of the process which might help explain what’s going on here. Models are trained using vast collections of images with words describing them, and from that they learn what things look like. When a user types in a prompt, the AI generates a new image using its knowledge of how things look.

AI doesn’t actually understand what things are, it just memorizes how they look.

It makes sense that AI will be better at drawing things that it has seen more of, so if we consider what’s common to see on the internet we can predict what kind of images it will be better or worse at generating. Human faces and cats are pretty common subjects and these come out decently most of the time. Microscopy, on the other hand, is a very niche subject.

These generated pictures of people using microscopes demonstrate this perfectly. The humans look pretty good (despite some weirdness if you look at them for too long), but the microscopes make absolutely no sense. The AI has clearly seen microscopes, since it can draw specific parts very well, but it hasn’t seen enough pictures of them in different contexts to be able to assemble a believable image of one by itself.

When it comes to drawing microbes, there are two major problems for AI. One is the lack of reference imagery, which is a problem for me as well. There just aren’t many pictures of microbes out there to learn from. The other is that all images of microbes we do have are a little bit “wrong”, and can be very misleading if you don’t understand what you’re looking at.

This episode of Journey to the Microcosmos explains why:

Basically, all imaging techniques used to see microbes have major limitations. Each method only gives partial information, which means two pictures of the same organism can look so different it’s hard to tell they are actually the same thing. Even when using the same technique, one picture may show the upper surface of a cell, and another shows the interior. It’s hard to make sense of this as a human too.

When I want to learn how a microorganism looks, I search for as many images of it as I can find and try to form a complete picture in my mind. This process is similar to how the AI works, but there is a very significant difference: understanding.

While doing this research, it’s important to know something about the different imaging techniques in order to understand which pieces of the puzzle I’m looking at. For example, I know light microscopes have a narrow depth of field, so the images come out looking very flat. Most microorganisms are not flat like paper, so I try to find videos or pictures that include different angles.

Since I want to portray microbes as wildlife in nature, I usually don’t want to draw them from the top-down perspective we see in microscopes. It’s challenging to work out the 3D shape of microorganisms using only videos or photos recorded by someone else, because I often can’t be sure what plane the person using the microscope is focused on, and it’s hard to keep track of which direction the focus is shifting when they adjust it. Sometimes I can’t quite tell if we’re currently looking at the top or bottom of an organism, for example, and if the focus is being adjusted while the organism is also moving or rolling around, some important details might not fully come into focus at all. That makes it difficult to know where exactly a specific feature like a mouth opening is on a cell, or where a prominent groove starts and ends. It’s easier if I can view it in my own microscope, but that’s not always possible or feasible.

In order to work all of this out, I try to learn more about the organism in other ways. For example, I look for research papers with descriptions and diagrams that can help make sense of the microscope images. Scanning Electron Microscopy (SEM) provides incredibly detailed images with depth that give more insight into the 3D form, but this technique requires extensive preparation, which includes killing and preserving the specimen, and that can affect how it looks in the final image. SEM images can be misleading for my purposes, so I have to keep that in mind when I study them. The images only show the surface topography, and any colours have been manually applied in editing. It’s clear that Dall-E 3 (and I, back in 2018) learned about the 3D shape of a tardigrade by studying SEM images of them, without realizing that a tardigrade is actually translucent like a jellyfish. SEM images are also very limited in availability: someone in a well-equipped lab somewhere has to have done this process with the specific organism I’m interested in, and made the images available to view online.

While doing this research, I try to imagine how it all fits together. The results are my best approximation of how the organisms would look if we could view them with our own eyes in their natural habitat. Since currently available AI models aren’t capable of understanding things in this way, they simply can’t put together convincing or accurate illustrations of microbes.

And speaking of habitat, the AI would also need to understand how to place an organism in an environmental context that makes sense. Microscopy requires us to take organisms out of their natural context, so the AI wouldn’t get that information from any microscope photos it was trained on.

Of course we can simply tell it where the creature lives, but if the prompt says “An amoeba in soil”, it needs to understand that this should be a microscopic perspective of soil, and not simply use what it has learned from normal photos of soil. Regular photos of soil very often have plants and mushrooms in them, so the AI would need to know that these features wouldn’t be visible on a microscopic level. To a microbe, a plant or mushroom is just a massive wall of cells. Microscope photos of soil have the same problem as photos of microbes and require the same kind of thinking and knowledge of the world in order to produce useful results. For example, it would need to understand that microscope photos tend to have a “blank” background only because the sample is spread out on a slide; not because microorganisms live in a weird void with no landscape.

What these AI models can do is impressive, but still has major limitations, especially when it comes to subjects like this. Even if the currently available AI image generators were provided with billions of accurately labeled microscope images, I think they would still produce weird results because they don’t understand the subjects. Unless someone finds a reason to specifically train an AI for this, the images will always look like a nonsensical mashup of different microscopy methods.

We should never say never when it comes to tech, but I find it unlikely that any person or company would find it worthwhile to make an AI that can do this.

I don’t think it’s the end for me… yet

AI definitely shook my confidence in 2023. It didn’t help that I had a painfully slow year, although there’s no way to know if AI had anything to do with that. Even if it did, experimenting with AI art generators has been more reassuring than I expected. Sometimes it does help to face your fears head on.

While I can see how generative AI could be useful in certain contexts, and I do see how it can take away career opportunities, it’s more limited than the hype would lead us to believe. People and companies may have stopped or reduced hiring human artists in favour of AI because it’s the hot new thing, but they might soon realize it’s not quite as magical as they first thought. Even with generic stock photo subjects, these generators constantly make mistakes and need significant corrections because they simply don’t understand anything. Some people will lower their standards and use the junk images simply because they’re fast and cheap, or free (I do worry about how this could impact the industry overall). Others might get tired of struggling to get the bots to understand what they want and seek out the real-world experience of human artists again.

Natural intelligence still has a place, at least for now.

AI could be useful for creative brainstorming and experimenting with ideas. I really liked some of the images above, even though they aren’t directly useful for anything. It’s fun to play with these things, but it’s a very short-lived fun with no real substance, like cotton candy.

What about using it in my workflow? I keep hearing that AI will help artists work faster or more efficiently, but handing any part of the process over to AI would rob me of the experience I need in order to improve my own skills. If you want to know more about my process, you can check out this post on my main website.

In the long term, I can see AI art generators settling into the industry as a new kind of artist to compete with, or a set of tools to use in certain contexts. They won’t replace all human artists, but many will need to adapt and learn to use them or find a new path entirely. Then again, every creative industry is now facing this issue, so finding a new path is easier said than done.

As for my career, I think it’s mostly a non-issue. It’s a tough time to be an artist for so many reasons, but I don’t think my career will end specifically because of AI art. I think it would take true AGI (Artificial General Intelligence) to produce convincing illustrations of microorganisms without specialized training. If we reach a point where artificial intelligence is that strong, then we have bigger things to worry about than niche artists like me running out of work.

What do you think?

I would love to hear your thoughts on all this. What did you think of the images in the post? Would you use them? I’m curious to know what you think about AI art in general too, especially now that it’s been around for a little while and the hype has calmed down a bit. Have you experimented with AI tools? Have you found them useful in your own work in some way?

I can’t wait to read your comments. Thank you so much for being here!

All the best,

Kate
